Sometimes here at Alley and Lede we half wish that our servers would strain under pressure like they used to. As we’ve written previously, hardware performance improvement has outpaced the demands of even the largest news websites over the past decade. Add in software improvements, especially in databases, where columnar storage systems have supplanted a wide variety of special-purpose tools, and in theory we’ve never had it better! While the servers themselves may not struggle as visibly as they once would have, many technology vendors charge by the number of requests, so scale can still be a business problem.

New Old Web is a blog published by Alley and Lede. We're researchers, strategists, designers, and developers who want to make the internet a fun place to live and work.

For example, we recently found ourselves looking at a big potential price increase from an important technology vendor for a large-scale media site because of request volume. When we dug in, it was clear that bot requests presented a big problem, and that they came not from search engines or LLMs, but from some of the client’s own technology vendors.
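Digging in like this usually starts with a tally of requests per user agent. Here is a minimal Python sketch of that idea, assuming access logs in the common "combined" format; the sample lines, IP addresses, and agent names are made up for illustration and are not the client's data.

```python
import re
from collections import Counter

# Matches the tail of a combined-format access log line:
# "request" status bytes "referer" "user-agent"
LOG_TAIL = re.compile(
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"$'
)


def count_by_user_agent(lines):
    """Return a Counter of request counts keyed by user-agent string."""
    counts = Counter()
    for line in lines:
        match = LOG_TAIL.search(line.strip())
        if match:  # silently skip lines that are not in the expected format
            counts[match.group("agent")] += 1
    return counts


# Hypothetical sample lines; in practice you would iterate over a log file.
sample = [
    '203.0.113.5 - - [01/Jan/2025:00:00:01 +0000] "GET /a HTTP/1.1" 200 512 "-" "ExampleVendorBot/1.0"',
    '203.0.113.5 - - [01/Jan/2025:00:00:02 +0000] "GET /a HTTP/1.1" 200 512 "-" "ExampleVendorBot/1.0"',
    '198.51.100.7 - - [01/Jan/2025:00:00:03 +0000] "GET /b HTTP/1.1" 200 900 "-" "Mozilla/5.0"',
]

# Print the busiest agents first.
for agent, hits in count_by_user_agent(sample).most_common():
    print(f"{hits:>6}  {agent}")
```

If one agent dominates the list and it isn't a browser or a crawler you recognize, that's the thread to pull.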

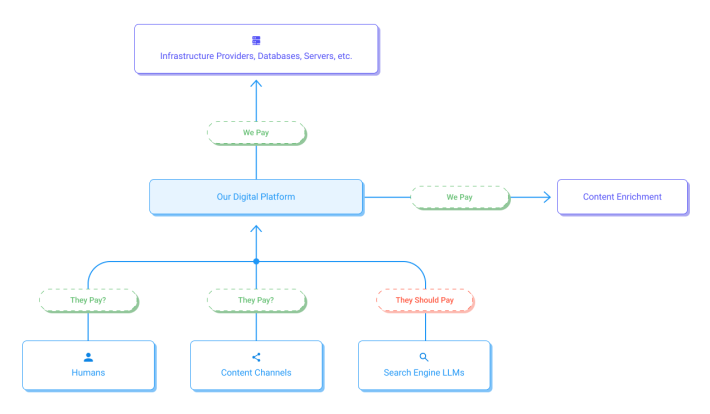

In addition to serving consumers directly, high-traffic news websites sit between upstream technology providers and downstream content distributors. Thus, modern news websites are platforms in their own right, and this customer was populating myriad distribution channels and applications from their website. Some of these tools, like a monetized video player, run directly on the media website. Others, like Google News or Apple News, send content to other systems that users can access directly. Generally speaking, even a very large news website has limited leverage over what happens upstream in its infrastructure providers and downstream in its distribution partners. The technology platforms have lots of other customers, and many downstream channels partner with anywhere from dozens of other media sites, as in a monetized video provider, to approximately all of them, as in Google News.

Media websites, as a general rule, ought to earn money from inbound HTTP requests. Content channels pay revenue shares, users view advertisements or buy subscriptions, and search engines, including LLMs, ought not be an exception, although that’s a topic for another post. When we partner with firms that provide content enrichments, like paywall providers and some flavors of video platforms, we’re typically paying them, but they’re often making HTTP requests anyway to instrument their part of the product.

When we investigated the core problem with our client, we examined their raw traffic logs and found that a third-party vendor for certain content features was not obeying our cache-control rules. As developers, we too often think of HTTP headers, meta tags, and robots.txt files as doctrine for user agents. But it’s easy to imagine the developer of a script that does a basic integration over HTTP, as this vendor’s did, not bothering to check caching rules or to look at anything in the response beyond the status code. We’re also learning that some bot-makers just break the rules outright. Fortunately, we were able to work with the vendor to improve their code.
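For a sense of what obeying caching rules means for a simple HTTP integration, here is a rough Python sketch. It is not the vendor's code, and it is deliberately simplified: it reads only `max-age`, `no-cache`, and `no-store` from a `Cache-Control` header and ignores the rest of the HTTP caching rules (`s-maxage`, `Age`, `Expires`, and so on). The function names are our own inventions.

```python
def parse_max_age(cache_control):
    """Return max-age in seconds, or 0 if absent or if reuse is not allowed."""
    directives = [d.strip().lower() for d in cache_control.split(",") if d.strip()]
    # Treat no-store and no-cache as "do not reuse without asking again".
    if "no-store" in directives or "no-cache" in directives:
        return 0
    for directive in directives:
        if directive.startswith("max-age="):
            value = directive.split("=", 1)[1]
            if value.isdigit():
                return int(value)
    return 0


def is_fresh(fetched_at, now, cache_control):
    """True if a copy fetched at `fetched_at` may still be reused at `now`.

    Both timestamps are seconds, for example from time.time().
    """
    return (now - fetched_at) < parse_max_age(cache_control)


# A script that polls every minute should skip the request while its copy is fresh.
header = "public, max-age=300"
print(is_fresh(fetched_at=0, now=60, cache_control=header))   # within five minutes
print(is_fresh(fetched_at=0, now=600, cache_control=header))  # past five minutes
```

A client that checks something like `is_fresh` before each poll makes one request per cache window instead of one per loop, which is the whole difference on a per-request bill.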

Just as modern hardware can obscure runaway requests, it can also obscure runaway storage usage. We inherited a site whose WordPress database was nearing a terabyte in size and growing rapidly. In some contexts, like photo storage, a terabyte isn’t very interesting anymore. And there may be some cases where a WordPress database should logically require a terabyte of storage. But a single article should need something like 20 KB of database storage, so a terabyte seemed suspicious. We found that enhancements to their article editor were causing WordPress to save each post revision twice, which roughly doubled the storage requirements of every single article. We noticed only because we had to set up local development environments. Absent this requirement, the servers might have happily chugged along using double the disk space.
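One way to check for this kind of duplication is to compare each revision with the one before it. The Python sketch below is an illustration, not the tooling we used: it assumes you have already exported revisions as `(revision_id, parent_id, content)` tuples, oldest first, and the sample rows are invented. In WordPress, revisions live in the posts table with a `post_type` of `revision` and a `post_parent` pointing at the article.

```python
import hashlib


def find_duplicate_revisions(revisions):
    """Return ids of revisions identical to the previous revision of the same post.

    `revisions` is an iterable of (revision_id, parent_id, content) tuples,
    ordered oldest first.
    """
    last_hash = {}  # parent_id -> hash of the most recent revision seen
    duplicates = []
    for revision_id, parent_id, content in revisions:
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if last_hash.get(parent_id) == digest:
            duplicates.append(revision_id)
        last_hash[parent_id] = digest
    return duplicates


# Hypothetical export: post 1 saves every revision twice, post 2 does not.
rows = [
    (101, 1, "Draft"),
    (102, 1, "Draft"),
    (103, 1, "Draft, second pass"),
    (104, 1, "Draft, second pass"),
    (105, 2, "Another article"),
]
print(find_duplicate_revisions(rows))
```

If roughly half of all revision ids come back as duplicates, the editor is probably double-saving.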

Our recommendations to find and eliminate performance issues obscured by powerful production hardware are:

- Run your project locally, and don’t ignore quality-of-life issues. Whatever is keeping your machine from serving a request quickly might be slowing down production servers too. Maybe the slowdown is less perceptible, or even nonexistent, in production, but making too many requests or slow requests may present a long-term problem.

- Frame performance issues as business problems. Ten years ago, “the site is down!” was immediately understood as both a business and technology problem. But setting aside actual outages, the cost of inefficiency is no different now than it was then. We pay too much for hardware and waste staff time. If you identify places to save money and time and then fix the underlying issues, you’ll be a hero.

- Coach third parties through shared issues using the business problem as a frame of reference. Use dollar signs if you can! Often, the costs we bear are mirrored by our downstream partners’ costs; if we have to pay our provider per response delivered, they may be paying theirs per request made.

- Instrument your systems responsibly, and make it the first step of onboarding any new system. In large-scale media sites, the line between engineering and site reliability keeps getting blurrier. Ask yourself if your job title will keep you from having to pitch in during an outage, and then go have a look at the server logs and dive into New Relic — now, before finance gets the next server bill.

- Diagram your upstream and downstream dependencies, and then go look for the magnitude of each dependency on your platform.
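To put dollar signs on a dependency diagram, a back-of-the-envelope calculation is often enough. The Python sketch below assumes a vendor that bills a flat price per million requests. The price, the dependency names, and the request counts are all hypothetical placeholders, so substitute figures from your own logs and contract.

```python
def monthly_cost(requests, price_per_million):
    """Cost of a month of requests at a flat per-million price."""
    return requests / 1_000_000 * price_per_million


def rank_by_cost(monthly_requests, price_per_million):
    """Return (dependency, cost) pairs, most expensive first."""
    costs = {
        name: monthly_cost(count, price_per_million)
        for name, count in monthly_requests.items()
    }
    return sorted(costs.items(), key=lambda item: item[1], reverse=True)


# Hypothetical numbers for illustration only.
PRICE_PER_MILLION = 0.50  # dollars, assumed flat rate
monthly_requests = {
    "human readers": 40_000_000,
    "vendor polling script": 90_000_000,
    "search crawlers": 10_000_000,
}

for name, cost in rank_by_cost(monthly_requests, PRICE_PER_MILLION):
    print(f"${cost:>8.2f}  {name}")
```

Ranking dependencies this way shows which conversation to have first, and it gives you a number to bring to it.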

You don’t have to be an enterprise media site for the advice above to be valuable. A little detective work now can ensure your growth is economical. Server costs should scale less than linearly with traffic, regardless of the scale of the site. Our client was able to avoid a possible six-figure increase in their server costs, and our developers and theirs were able to work more efficiently, all because of a little bit of old-fashioned log sleuthing.