Robots.txt is a plain-text file, published at the root of a website, that lets site owners give instructions to crawlers, scrapers, spiders, and other automated systems that identify themselves by a user agent name. Once used mainly to give search engines a green or red light to access a site’s content, robots.txt is now being put to a completely new use by publishers: managing web crawlers that collect content to train AI models, or that read websites on behalf of users during chat sessions with external LLMs. These bots can benefit users by introducing them to valuable information they might otherwise not encounter, and they can further an organization’s mission to spread its ideas. But there are clear drawbacks for most publishers, who rely on traffic to their digital platforms to build an audience and drive revenue. AI bots deliver the value of a publisher’s work directly to the user, often synthesizing the work of many publishers and other sources, and credit may not be given where credit is due.

By convention, bots should follow the instructions in robots.txt. Bots don’t generally announce what kind of bot they are beyond the user agent, but the Dark Visitors index of bots helpfully categorizes different bots by type.
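To make the convention concrete, here is a minimal sketch of the check a rule-following crawler performs before fetching a page, using Python’s standard-library `urllib.robotparser`. The robots.txt content, the `news.example.com` URL, and the helper name `may_fetch` are hypothetical, and real crawlers use their own parsers, which can differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: two AI crawlers are refused everywhere,
# while every other user agent falls through to the permissive "*" group.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: CCBot
Disallow: /

User-agent: *
Disallow:
"""

def may_fetch(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if a crawler that honors robots.txt may request this URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

story = "https://news.example.com/2024/05/story.html"
for agent in ("GPTBot", "CCBot", "Googlebot"):
    print(agent, may_fetch(agent, story))
# GPTBot False
# CCBot False
# Googlebot True
```

Nothing in this check is enforced by the website: the crawler runs it and decides for itself whether to respect the answer.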

Unlike conventional search engines, which frequently re-crawl sites to keep their information up to date, most AI models cannot easily “forget” what they have already learned. A change to robots.txt might take days or weeks to propagate through Google’s search index, but it may be many months before OpenAI or Anthropic releases a new model trained on data from the company’s most recent crawls. And to the extent that these models build on previous training or on synthetic data from earlier models, it may be entirely too late for technical changes to make any impact.

So we wanted to know: What have publishers done about this?

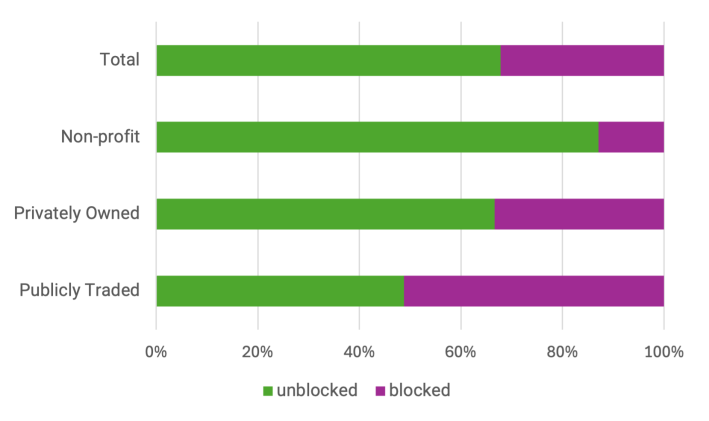

In our analysis of the robots.txt files of 5,818 English-language media websites, only 13% of nonprofit news organizations block any AI bot, compared with 51% of publicly traded media companies and 33% of privately held media companies. Overall, 32% of all sites in our index block at least one AI bot.

New Old Web is a blog published by Alley and Lede. We're researchers, strategists, designers, and developers who want to make the internet a fun place to live and work.

This suggests that most media companies have either decided not to block AI bots or have not yet considered the question. Larger privately held media firms are also more likely to block AI bots than their smaller counterparts, so being publicly traded may partly be a proxy for size. Larger companies are more likely to have the resources to weigh the tradeoffs of allowing AI bots, and to act on their decision. Publicly traded media organizations often own several digital brands, and some notable conglomerates own hundreds, so centralized control over technology systems may also play a role. But even compared with privately held media websites, nonprofit sites block far fewer AI bots.

Media companies fully or partially blocking any AI bot, by business type:

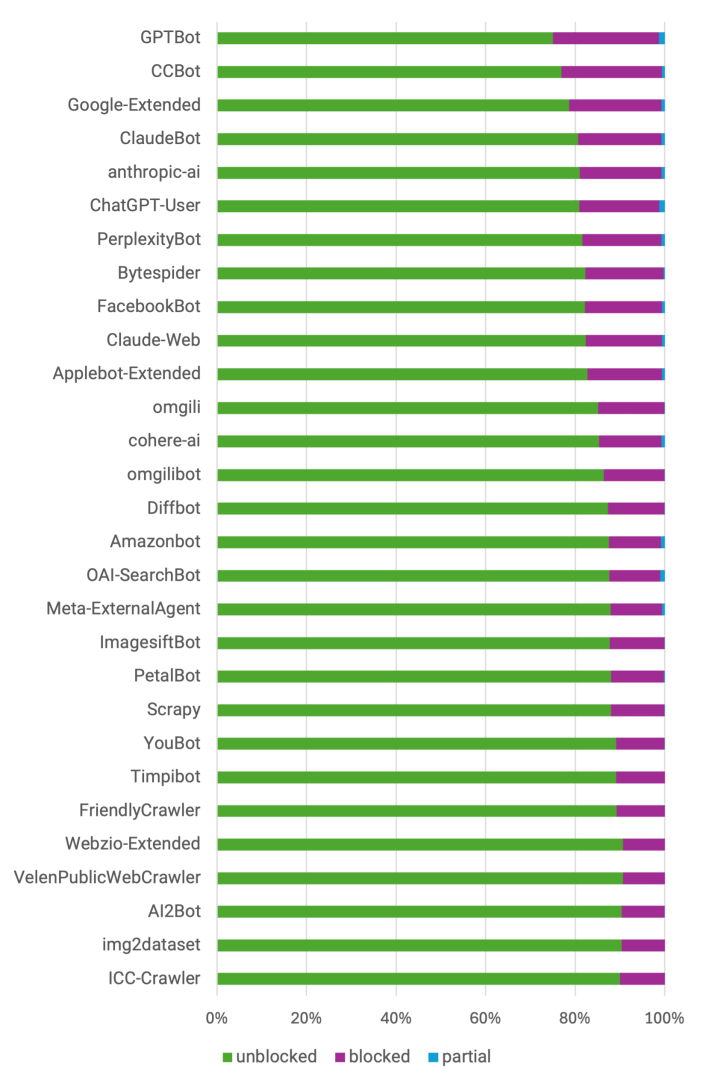

GPTBot is most blocked

OpenAI’s GPTBot is the most commonly disallowed AI bot, closely followed by CCBot: 29% of all sites in our index block GPTBot, and 27% block Common Crawl’s CCBot. Common Crawl is a nonprofit that maintains an open repository of web crawl data that several companies have used to train their LLMs. 24% block Google-Extended, a robots.txt token Google announced in 2023 that, when disallowed, tells Google not to use the site’s content for AI training purposes. Anthropic’s ClaudeBot and anthropic-ai user agents are blocked by about 21% of sites in our index.

There may in some cases be business reasons for blocking OpenAI while allowing Anthropic, but it seems more likely that many news organizations created these robots.txt entries in the early days of LLMs, before other AI crawlers were widely known, and have not revisited them since. A high-ranking 2023 blog post advising organizations on how to prevent AI crawlers from gathering images and text from a website suggests blocking only GPTBot and CCBot and does not mention any others. The top 30 bots blocked in our index are all identified by Dark Visitors as AI bots; the most commonly blocked non-AI bot is AhrefsBot, an SEO crawler blocked by 8% of sites in our index.
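Figures like “29% of all sites block GPTBot” are shares of the whole index, so the denominator includes sites that block nothing at all. Here is a minimal sketch of that tally in Python, assuming each site has already been reduced to the set of user agents it fully or partially blocks. The four sites and their blocklists are made up for illustration and are not our data; `block_rates` is a hypothetical helper name.

```python
from collections import Counter

# Hypothetical input: the user agents each site's robots.txt fully or
# partially blocks. These four sites are invented for illustration.
blocked_by_site: dict[str, set[str]] = {
    "site-a.example": {"GPTBot", "CCBot", "Google-Extended"},
    "site-b.example": {"GPTBot", "ClaudeBot", "anthropic-ai"},
    "site-c.example": {"AhrefsBot"},
    "site-d.example": set(),  # blocks nothing, but still counts in the denominator
}

def block_rates(sites: dict[str, set[str]]) -> list[tuple[str, float]]:
    """Share of ALL sites that block each agent, most-blocked first."""
    counts = Counter(agent for agents in sites.values() for agent in agents)
    total = len(sites)
    return [(agent, n / total) for agent, n in counts.most_common()]

# A "top 30" chart is just the first 30 rows of this ranking.
for agent, share in block_rates(blocked_by_site)[:30]:
    print(f"{agent:16} {share:.0%}")
```

Dividing by every site in the index, rather than only by sites that block something, is what makes the percentages comparable across bots.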

While ChatGPT is still the leading AI chatbot with about 80% market share, others are gaining ground. The second most-used chatbot is Perplexity, whose crawler is blocked by 20% of media sites in our index.

Top 30 fully or partially blocked User Agents:

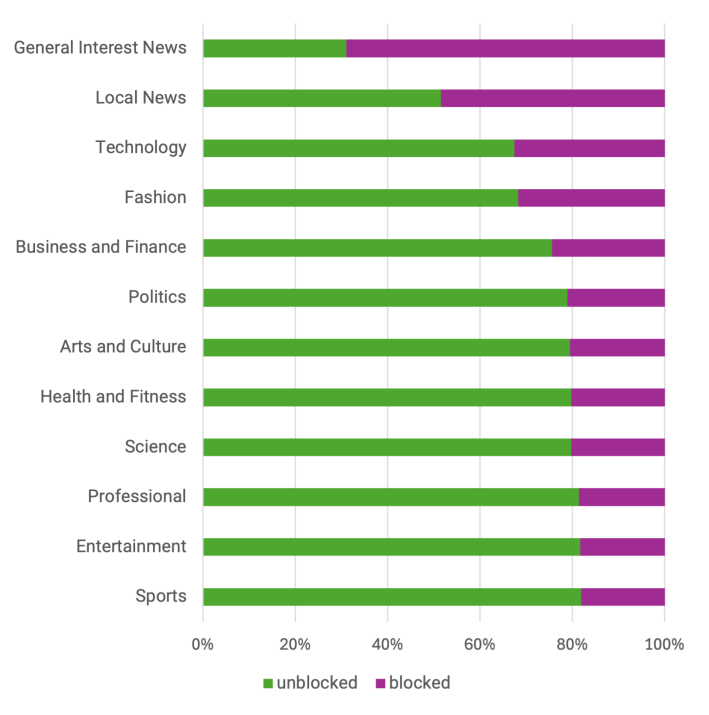

Most general interest sites block AI bots

69% of all general interest news sites block AI bots. These publishers cover the full spectrum of news and typically have a large newsroom with a global footprint. Nearly half of all local news sites block AI bots, but sites that cover specific verticals are less likely to do so, with sports and entertainment sites blocking the fewest AI bots. Many local news sites are owned by conglomerates that run them on shared technology platforms, which may explain why these sites are relatively more likely to block AI bots.

Why don’t more media sites block AI bots?

There are several possible explanations: a belief that AI is inevitable as a discovery mechanism; a belief that future industrywide payments, like Anthropic’s proposed $1.5bn settlement with authors, will only be available to publishers whose sites were indexed; or simply a lack of attention to the topic within news organizations, or a lack of communication between strategic decision makers and the people who administer their sites. Until very recently, robots.txt was viewed as a technical tool for shaping bot traffic, not a strategic lever.

The belief in the inevitability of LLMs as major sources of consumer information is logical, and anecdotally supported by some conversations we’ve had with media companies, but it’s not easily provable. Some media companies are noticing rising visits from ChatGPT. The debate over Google’s own algorithm changes to prioritize AI-driven explanations over traditional search results fuels the notion of inevitability, given that Google has historically been a major traffic driver for many sites in our index.

The belief in future AI payments is equally hard to prove. The New York Times has both sued OpenAI and blocked it from accessing its site, which suggests that blocking now need not rule out compensation later: some companies may win settlements or enter into licensing agreements covering past crawling that occurred before media companies even knew which user agents to block. Other companies have already entered into licensing agreements, and may block bots from AI companies that have not licensed their content.

Additional qualitative research could provide more detailed answers on this topic, especially regarding whether media sites are implicitly allowing LLMs because they don’t understand the issue or because they have deliberately chosen not to block them.

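It’s also possible that some publishers are blocking user agents at the server level, for example by refusing requests whose User-Agent header matches a known AI bot, rather than (or in addition to) listing them in robots.txt. Such blocks would not appear in our robots.txt analysis. This may be possible to test, and as this type of blocking grows in popularity, we plan to do so.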
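Server-level blocking does not ask for the bot’s cooperation: the server inspects the User-Agent header of each request and refuses matching ones. This is often done in web server, CDN, or firewall configuration; as a hypothetical stand-in, here is a minimal Python WSGI middleware sketch. The blocklist tokens and the names `block_user_agents`, `demo_app`, and `status_for` are illustrative assumptions, and the GPTBot-style header below is illustrative rather than OpenAI’s exact string. Because it matches only the name a client claims, it still cannot catch bots that disguise themselves as browsers.

```python
from typing import Callable, Iterable

# Hypothetical blocklist: case-insensitive substrings matched against User-Agent.
BLOCKED_AGENTS = ("gptbot", "ccbot", "claudebot")

def block_user_agents(app: Callable, blocked: Iterable[str] = BLOCKED_AGENTS) -> Callable:
    """Wrap a WSGI app so requests whose User-Agent contains a blocked token get a 403."""
    tokens = tuple(t.lower() for t in blocked)

    def middleware(environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in agent for token in tokens):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden\n"]
        return app(environ, start_response)  # everyone else reaches the site

    return middleware

def demo_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"story text\n"]

app = block_user_agents(demo_app)

def status_for(user_agent: str) -> str:
    """Call the wrapped app directly (no server) and return the status line it sends."""
    seen = []
    app({"HTTP_USER_AGENT": user_agent}, lambda status, headers: seen.append(status))
    return seen[0]

print(status_for("Mozilla/5.0 (compatible; GPTBot/1.0)"))        # 403 Forbidden
print(status_for("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))   # 200 OK
```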

Is it too late to block?

Conventional search crawlers like Google’s preserve web pages as documents which can be “forgotten” if robots.txt is updated. To effectively answer arbitrary questions conversationally, LLMs store information differently, and once information has been encoded into the neural net that underpins the LLM, it is nearly impossible to remove. Blocking an LLM at this point might prevent new models from training on text found at the blocked URLs, but if a new model encodes information from an older model that had previous implicit permission to train on the site, it may be impossible to extricate the older information from that LLM.

The ethics of LLMs indexing sites without notifying site owners or industry groups of their intentions has been widely debated elsewhere, and various lawsuits detail the thorny issues at the heart of this question. There’s a second, more technical question about the sufficiency of robots.txt, which before LLMs was used as a “disallow” tool to keep rule-following bots away from parts of a site and to curate what conventional search engines can access. Robots.txt is inherently an honor system, and malicious bots frequently disguise themselves as conventional web browsers to retrieve any publicly available page. That weakness is one reason some sites now put pages behind CAPTCHAs.
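The honor-system problem is visible in how a robots.txt check works: the only input that decides which rules apply is the name the client chooses to send. In this minimal sketch using Python’s standard-library `urllib.robotparser` (the robots.txt and URL are hypothetical), the same crawler is refused when it identifies itself as GPTBot and permitted when it claims to be a desktop browser. The check is also purely advisory, since nothing stops a client from fetching the page regardless of the answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: GPTBot is refused; everyone else is allowed.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://news.example.com/2024/05/story.html"
honest_agent = "GPTBot"
disguised_agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"  # same bot, browser-like name

# Only the claimed name decides which group applies.
print(parser.can_fetch(honest_agent, url))     # False
print(parser.can_fetch(disguised_agent, url))  # True
```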

The future of robots.txt

The role of this 31-year-old piece of digital infrastructure is shifting rapidly from nuisance prevention to strategic lever. Even though it may be nearly impossible to excise information already encoded in a neural network, media companies could choose to limit access to preserve the originality of newly reported stories. Since many of the sites in our index cover current events, that could be advantageous. One way to accomplish this would be to disallow all bots and then specifically allow non-AI bots. For example, if a media site wanted to stay in Google’s index, it could allow Googlebot to access some or all of its pages.
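A minimal sketch of that allowlist approach, checked with Python’s standard-library `urllib.robotparser`. Which crawlers to allow is each publisher’s choice: Googlebot and Bingbot here are only examples, `SomeNewAIBot` is a hypothetical name standing in for a crawler nobody has listed yet, and the URL is made up. Individual crawlers may interpret edge cases of the format differently from Python’s parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical allowlist policy: refuse every crawler by default,
# then open the site to the specific non-AI crawlers the publisher wants.
ALLOWLIST_ROBOTS_TXT = """\
User-agent: Googlebot
User-agent: Bingbot
Allow: /

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ALLOWLIST_ROBOTS_TXT.splitlines())

url = "https://news.example.com/2024/05/story.html"
for agent in ("Googlebot", "Bingbot", "GPTBot", "SomeNewAIBot"):
    print(agent, parser.can_fetch(agent, url))
# Googlebot True
# Bingbot True
# GPTBot False
# SomeNewAIBot False
```

The tradeoff of this design: an unfamiliar new AI crawler is refused by default without anyone editing the file, but so is any legitimate crawler missing from the allowlist, for example a smaller search engine.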

Methodology and Use of AI

We built a list of 5,818 media websites from a variety of sources, including industry lists. We used several tools, including ChatGPT, to identify and categorize the sites by how each is monetized, its primary topic, its business structure, its region, and its primary medium for delivering content. We then validated and edited the list by hand. We retrieved and stored the robots.txt file for each site, parsed each file to detect the blocked user agents, classified those agents against a list of known user agents, and analyzed the results in a data science notebook.
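A simplified sketch of the parsing and classification steps in Python. This is not our actual pipeline, and it makes assumptions of its own: “fully blocked” means a group contains `Disallow: /`, “partially blocked” means it disallows some other path, `Allow` lines and wildcard patterns are ignored, and only explicitly named agents (not `User-agent: *`) are counted. `KNOWN_AI_AGENTS` is a small hypothetical subset standing in for a full list of known user agents, and the helper names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical subset of user agents classified as AI bots (lowercased).
KNOWN_AI_AGENTS = {"gptbot", "ccbot", "google-extended", "claudebot", "anthropic-ai", "perplexitybot"}

@dataclass
class Group:
    agents: list[str] = field(default_factory=list)     # names from consecutive User-agent lines
    disallows: list[str] = field(default_factory=list)  # non-empty Disallow paths

def parse_groups(robots_txt: str) -> list[Group]:
    """Split robots.txt into groups; consecutive User-agent lines share one group."""
    groups: list[Group] = []
    current: Optional[Group] = None
    last_was_agent = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if ":" not in line:
            continue
        key, value = line.split(":", 1)
        key, value = key.strip().lower(), value.strip()
        if key == "user-agent":
            if current is None or not last_was_agent:
                current = Group()
                groups.append(current)
            current.agents.append(value)
            last_was_agent = True
        else:
            # An empty Disallow value means "allow everything", so it is skipped.
            if key == "disallow" and current is not None and value:
                current.disallows.append(value)
            last_was_agent = False
    return groups

def blocked_agents(robots_txt: str) -> dict[str, str]:
    """Map each explicitly named agent to 'full' (Disallow: /) or 'partial'."""
    result: dict[str, str] = {}
    for group in parse_groups(robots_txt):
        if not group.disallows:
            continue
        level = "full" if "/" in group.disallows else "partial"
        for agent in group.agents:
            if agent != "*" and result.get(agent) != "full":
                result[agent] = level
    return result

def ai_blocks(robots_txt: str) -> dict[str, str]:
    """Keep only blocked agents that appear on the known-AI list."""
    return {a: lvl for a, lvl in blocked_agents(robots_txt).items() if a.lower() in KNOWN_AI_AGENTS}

sample = """\
User-agent: GPTBot
User-agent: CCBot
Disallow: /

User-agent: ClaudeBot
Disallow: /archive/

User-agent: AhrefsBot
Disallow: /

User-agent: *
Disallow: /admin/
"""
print(ai_blocks(sample))
# {'GPTBot': 'full', 'CCBot': 'full', 'ClaudeBot': 'partial'}
```

AhrefsBot is detected as blocked but filtered out at the classification step, since it is an SEO crawler rather than an AI bot.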